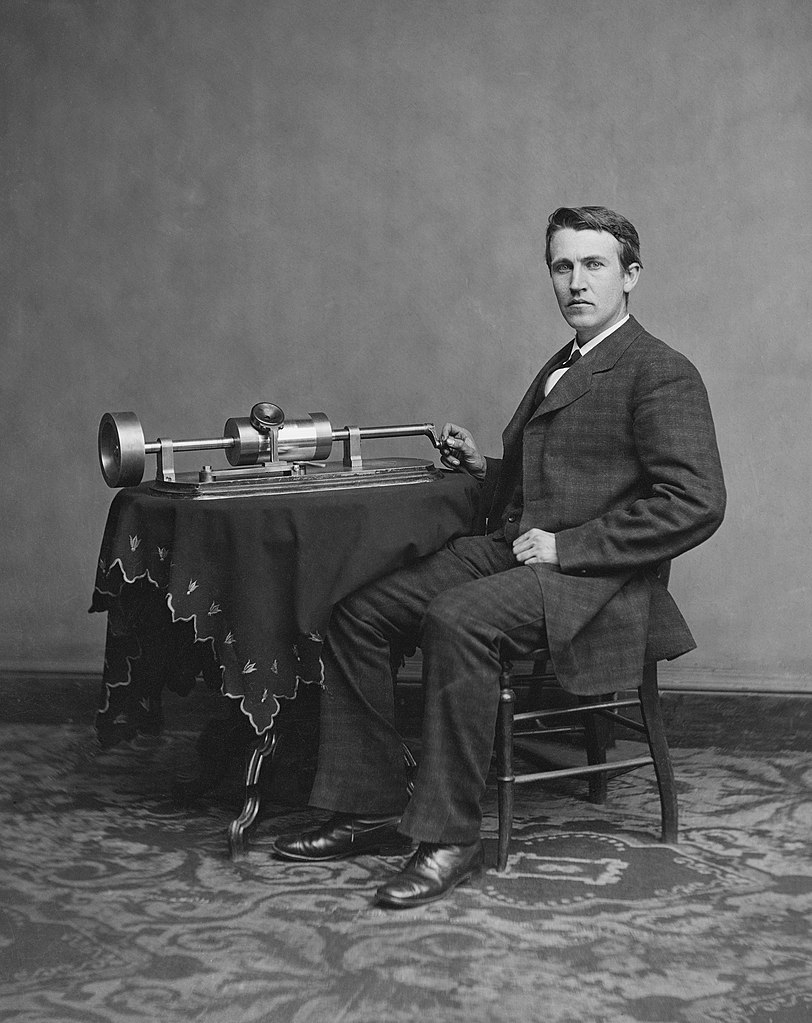

Edison and phonograph circa 1877 / Public domain, Wikimedia Commons

“To count to ten, I used me fingers. If I needed more,

“By getting my shoes and socks off, I could count to twenty-four…”

— Rolf Harris, “Jake the Peg”

Sometime in the late 19th century (December 1877, in fact), Thomas Alva Edison used a needle attached to a diaphragm to write the vibrations of his voice onto a sheet of tin foil stuck around a rotating cylinder. When he reversed the process, the needle caused the diaphragm to vibrate, producing a sound that was very similar to his voice saying the words that he had spoken.

That was the start of what we today call analogue recordings. The word analogue (or, as the Americans spell it, analog) comes from the Greek word analogos, which means something that is similar to something else. Analogue recordings have gotten better over time, but they have always been something similar to the original, never an exact copy. Most of us know that two photographs taken with two cameras in the same place would look very similar, but would never be exactly the same. And two recordings made with two tape recorders in the same place at the same time would sound very similar, but would never be exactly the same.

We’ve lived happily with analogue recordings for more than 100 years, thank you very much, and most of us would be quite happy to continue doing so. But for many industries, analogue recordings have limitations. One of the biggest problems with analogue is what we refer to as generational loss. We already know that an analogue recording is similar to the original picture and sound, but is not an exact copy. So, a copy of the recording is even less similar to the original. And as we make copies of copies of copies, the quality gets worse and worse. In other words, each generation is less like its ancestor than its parent was. For most of us, this has not been a problem, as we normally copied our records onto tape for playing in our cars, or had photographs blown up and retouched, and we were quite happy with the quality of the result.

But what about the scientist who needs an accurate recording of an earth tremor to be able to study the pattern of earthquakes? What about the doctor who needs an accurate picture of the inside of a cell to test for cancer? What about the meteorologist who needs an accurate picture of the earth from a satellite to be able to calculate weather patterns?

By the late 1960s, scientists had found a solution for recording sound faithfully. They took a sound signal, measured its amplitude (its strength at that instant) many thousands of times every second, and recorded those measurements as numbers onto tape. Measured often enough, the numbers capture every pitch across the entire audio spectrum that human beings are able to hear. By converting those numbers from the tape back into sound, they were able to produce for the first time a recording that would not lose quality when copied. And because numbers were being recorded, these became known as digital recordings.
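As a rough sketch of the idea, the Python below measures a pure tone at regular intervals, stores each measurement as a whole number, and then copies the recording a hundred times. The sample rate of 44,100 measurements per second and the 16-bit number range are the figures later used on CDs and are assumed here purely for illustration; the function names are made up for this example.

```python
import math

SAMPLE_RATE = 44_100   # measurements per second (assumed: the CD figure)
MAX_LEVEL = 32_767     # largest value a signed 16-bit number can hold

def record(tone_hz, milliseconds):
    """Measure a pure tone's amplitude at regular intervals as whole numbers."""
    count = SAMPLE_RATE * milliseconds // 1000
    return [round(MAX_LEVEL * math.sin(2 * math.pi * tone_hz * n / SAMPLE_RATE))
            for n in range(count)]

def make_copy(samples):
    """Copying a digital recording just means copying the numbers."""
    return list(samples)

original = record(440, 10)   # 10 milliseconds of a 440 Hz tone

# Make a copy of a copy of a copy, one hundred generations deep.
generation = original
for _ in range(100):
    generation = make_copy(generation)

print(len(original), "numbers recorded")
print("100th-generation copy identical to original:", generation == original)
```

Because every generation holds exactly the same numbers, the hundredth copy is indistinguishable from the first, which is the property analogue copies lack.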

Early digital recorders were bulky, expensive things, but they laid the groundwork for the biggest leap in audio technology for the man in the street little more than ten years later. The digital audio disc was first known as DAD, but soon became known as the compact disc, or CD for short. It worked by taking the numbers recorded by the digital tape recorders and pressing these as a pattern of microscopic pits into the reflective surface of the CD. When the CD is later scanned by a laser, the pulses of light reflected from the surface are converted to numbers, and from numbers back to sound.

But what about pictures? Scientists already understood the theory of how to store pictures in digital form. All we have to do is divide a picture into dots. For each dot, we need to know how bright it is, and what is its colour. Both the brightness and colour values are numbers. If we accurately read and store those numbers, we can recreate the original picture.

But the problem was, and still is, that there is a lot more information needing to be recorded for a picture than for a sound clip. A full-colour picture uses 24 bits (3 bytes) for each dot, which allows for some 16 million different colours. So a picture the size of a small computer screen, 640 x 480 dots, needs 640 x 480 x 3 = 921,600 bytes, or about 900 kilobytes, if stored digitally. That is most of a 1.44 megabyte floppy disk for just one picture. Considering that a typical hard disk through the 1980s was about 20 megabytes in capacity, and so could hold only about twenty such pictures, this was not something that could be tried at home.

The first real breakthrough in storing images came from a 1980s online computer service called CompuServe, which was looking for a way to transfer pictures over very slow phone lines. (Remember that the World Wide Web did not exist in the 1980s.) CompuServe suggested, firstly, that pictures be limited to 256 colours out of the more than 16 million. This meant that the most space a 640 x 480 picture could take up on hard disk would be about 300 kilobytes. Secondly, they suggested that each picture include a palette (which they called a colour map) of the 256 colours needed to draw that picture. Finally, they suggested that the picture information be compressed so that it takes up less space on disk. They called this format the Graphics Interchange Format, or GIF for short.
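The palette idea can be sketched in a few lines of Python. This is only an illustration of the storage arithmetic, not the GIF file format itself: the grey-ramp palette, the test pattern, and the `colour_at` helper are all invented for the example, and the final compression step is left out.

```python
WIDTH, HEIGHT = 640, 480

# A palette ("colour map") of 256 entries, each a full (red, green, blue) colour.
# Here it is a simple ramp of greys, chosen purely for illustration.
palette = [(level, level, level) for level in range(256)]

# The picture stores one palette index (0 to 255) per dot, so one byte per dot.
indexed_picture = bytes((x + y) % 256 for y in range(HEIGHT) for x in range(WIDTH))

def colour_at(x, y):
    """Look up the real colour of a dot by way of the palette."""
    return palette[indexed_picture[y * WIDTH + x]]

picture_bytes = len(indexed_picture)   # one byte for every dot
palette_bytes = len(palette) * 3       # three bytes for every palette entry
possible_colours = 256 ** 3            # colours a palette entry can choose from

print(picture_bytes // 1024, "kilobytes of dots before compression")
print(palette_bytes, "bytes of palette")
print(possible_colours, "colours to choose the palette from")
```

Each picture can still pick its 256 colours from the full range of more than 16 million; it simply cannot use more than 256 of them at once.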

For the next ten years or so, GIF reigned supreme on the Internet as the way to store pictures. But two things stood against it. Firstly, real photographs need more than 256 colours if they are to be a real alternative to film. Secondly, the compression method used by GIF was covered by a patent held by Unisys, and in the mid-1990s Unisys and CompuServe announced that software developers using the format should pay royalties. An alternative for photographs came from a committee set up jointly by the international standards bodies ISO and CCITT. They called themselves the Joint Photographic Experts Group.

The task team approached the question of how to store pictures somewhat differently to the way previous scientists had looked at the problem. The human mind, they argued, needs to be provided with only minimal information to complete a picture (which is why we love doodling and cartoon characters). So, why not store picture information the same way? Toss out most of the information when storing the picture, but then have the computer understand how to fill in the gaps from a human point of view. (This approach is properly known as lossy compression, although it is sometimes loosely described as fuzzy logic.)

The result was the JPEG standard, which allows us to store pictures at anything from about 100:1 compression at low quality to a worst case of about 20:1 compression at high quality. And with the invention of faster computers, we could now have the computer calculate how to draw the picture almost immediately.
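To give a flavour of "toss out information, then fill in the gaps", here is a toy Python sketch. JPEG is built on a mathematical tool called the discrete cosine transform, which rewrites a run of dots as a broad average plus progressively finer detail. The sketch below is a deliberate simplification: it works on a single made-up row of eight brightness values and simply drops the finest detail, whereas real JPEG works on 8 x 8 blocks and stores the fine detail more coarsely rather than deleting it outright.

```python
import math

def dct(block):
    """Rewrite a row of dots as an average (first value) plus finer and finer detail."""
    n = len(block)
    out = []
    for k in range(n):
        scale = math.sqrt((1 if k == 0 else 2) / n)
        out.append(scale * sum(v * math.cos(math.pi * (i + 0.5) * k / n)
                               for i, v in enumerate(block)))
    return out

def inverse_dct(coeffs):
    """Rebuild the row of dots from its average-plus-detail description."""
    n = len(coeffs)
    return [sum(math.sqrt((1 if k == 0 else 2) / n) * c
                * math.cos(math.pi * (i + 0.5) * k / n)
                for k, c in enumerate(coeffs))
            for i in range(n)]

def lossy_round_trip(block, keep):
    """Keep only the first `keep` numbers, discard the rest, and rebuild the row."""
    coeffs = dct(block)
    kept = coeffs[:keep] + [0.0] * (len(coeffs) - keep)
    return inverse_dct(kept)

row = [52, 55, 61, 66, 70, 61, 64, 73]     # hypothetical brightness values
approx = lossy_round_trip(row, keep=4)     # store half as many numbers

print("original:", row)
print("rebuilt: ", [round(v) for v in approx])
```

The rebuilt row is not identical to the original, but it follows the same overall shape using half the numbers, which is the trade-off the paragraph above describes.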

But we are mainly interested in video, not still pictures. So, let’s leave that aside for now and look at how movies work. When still pictures are shown in quick succession, the eye and brain blend them into continuous motion, and the film industry settled on 24 pictures every second as its standard. So, if we can set up a camera to take 24 pictures every second, and then play back those pictures at the same speed, the human eye will see it as one uninterrupted moving picture. Anything slower than 24 pictures or frames per second will appear jumpy, as was the case with the first movies, but we can still go as low as 15 frames per second without confusing the brain too much. Anything faster than 24 frames per second will look increasingly smooth as it speeds up.

How do we get a moving picture onto a television screen? Well, the back of the TV screen tube has a heated filament, almost exactly like a light bulb. This filament, called the cathode, gives off electrons. (This is why most TV screens and computer screens are also referred to as CRTs, which stands for “Cathode Ray Tube”.) Sitting between the cathode and the screen is a charged piece of metal called an anode. The cathode and the anode are exactly like the terminals on a car battery: one has a positive charge, and one has a negative charge. And like a car battery, sparks (electrons) will fly between negative and positive if they are brought together.

If there were only one anode, the electron leaving the filament would simply fly across to it and do nothing. But a CRT has more than one anode. Because both anodes attract the electron at the same time, the electron flies between them and hits the TV screen. Think of the anodes as a pair of magnets, and the electron as a pin dropped between them. By varying the strength of the magnets (in this case, the charge on the anodes), we can steer the electron to hit the TV screen exactly where we want it to. The TV screen is coated with a phosphorescent substance, meaning it glows when an electron hits it. By steering the electrons across the screen, we can draw lines of tiny dots across the screen starting at the top left and ending at the bottom right. By varying the intensity of the electron beam, we can make the dots on screen lighter or darker. If we look closely at the TV screen, we can see the dots, but because we normally sit some distance from the TV, our eyes mesh the dots together to form a picture.

On a colour TV screen, the phosphorescent bits are made up of three dots placed close to each other, and each dot glows in red or green or blue. Because any colour can be made by combining red, green, and blue, our eyes are fooled into believing that the entire spectrum of colours is on display for us. If you take a magnifying glass to your TV screen, you will see these dots. And if you were to count these, you would find your TV screen has about 768 dots going across, and up to 625 lines of dots going down. At least, that is the case in the PAL world used by most of Europe, most of Africa, and most of Australasia. The picture on a PAL TV set updates 25 times per second, which is of course faster than the 24 frames per second needed by the human eye.

So, let’s combine what we know about TV transmission, and what we know about storing pictures in digital format. Now that we have an efficient way to store pictures (JPEG), it would then seem to be easy enough to create digital video. All one would have to do is take each frame, store it as a JPEG file, and then replay it at a rate of 25 frames per second. We would simply need to have a computer fast enough to be able to translate 25 JPEGs per second.
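To get a feel for the numbers involved, here is a back-of-the-envelope calculation in Python using the PAL figures above (768 dots across, 625 lines, 25 frames per second) and the 20:1 high-quality JPEG ratio mentioned earlier. The figure of 3 bytes per dot is an assumption made for this sketch, and the results are rough estimates rather than measurements of any real system.

```python
DOTS_ACROSS = 768
LINES_DOWN = 625
FRAMES_PER_SECOND = 25
BYTES_PER_DOT = 3         # assumption: one byte each for red, green and blue
COMPRESSION_RATIO = 20    # the high-quality JPEG figure quoted earlier

frame_bytes = DOTS_ACROSS * LINES_DOWN * BYTES_PER_DOT
raw_bytes_per_second = frame_bytes * FRAMES_PER_SECOND
jpeg_bytes_per_second = raw_bytes_per_second // COMPRESSION_RATIO
hour_bytes = jpeg_bytes_per_second * 3600

print("one uncompressed frame:", frame_bytes, "bytes")
print("uncompressed video:    ", raw_bytes_per_second / 1_000_000, "megabytes per second")
print("as a stream of JPEGs:  ", jpeg_bytes_per_second / 1_000_000, "megabytes per second")
print("one hour of JPEGs:     ", hour_bytes / 1_000_000_000, "gigabytes")
```

Even with every frame squeezed to a twentieth of its size, an hour of video under these assumptions runs to several gigabytes.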

Scientists did exactly this, and developed a standard known as Motion JPEG, or M-JPEG for short. Many video servers were developed in the 1990s using this standard. e.tv’s servers in South Africa use this technology.

But while M-JPEG did its job well, it was still not widely adopted. It still needed vast quantities of disk space, and it was inefficient because it treated each frame as a separate picture. Think of a recent movie you have seen: apart from the fast action sequences, not much changes in the picture within the space of a second, and it would make more sense to apply the same throw-away-and-fill-in approach across many frames at a time. So, a new task team was set up called the Moving Picture Experts Group.

The MPEG-1 standard was developed by this group, and used exactly the process we have just described. Several contiguous frames (called a group of pictures) would be encoded and compressed at once. The audio would also be compressed and included in the file at the same time. The result was a digital file format that produced a TV picture and sound at least as good as a VHS video recorder. The MPEG-1 format has been most widely used in Video CDs or VCDs. This format has been widely adopted in the Far East, but never became popular in the rest of the world.
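The saving from looking at several frames together can be sketched with a toy Python example. This is not the real MPEG-1 method, which predicts motion between frames and also discards detail; the sketch below, with its invented `encode` and `decode` functions, simply stores the first frame whole and then records only the dots that changed in each later frame.

```python
def encode(frames):
    """Store the first frame whole, then only the dots that changed in each later frame."""
    first = list(frames[0])
    changes = []
    previous = first
    for frame in frames[1:]:
        changes.append([(i, new) for i, (old, new) in enumerate(zip(previous, frame))
                        if old != new])
        previous = frame
    return first, changes

def decode(first, changes):
    """Rebuild every frame by applying each list of changes to the frame before it."""
    frames = [list(first)]
    for delta in changes:
        frame = list(frames[-1])
        for i, value in delta:
            frame[i] = value
        frames.append(frame)
    return frames

# Three tiny 8-dot "frames" in which a single bright dot moves to the right.
frames = [
    [0, 9, 0, 0, 0, 0, 0, 0],
    [0, 0, 9, 0, 0, 0, 0, 0],
    [0, 0, 0, 9, 0, 0, 0, 0],
]

first, changes = encode(frames)
print("first frame:", first)
print("changes:    ", changes)
print("rebuilt correctly:", decode(first, changes) == frames)
```

Here the second and third frames each shrink from eight numbers to two small changes, and the less that moves on screen, the bigger the saving.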

As faster computers developed, the MPEG-1 standard evolved and MPEG-2 was born. This format raised the picture quality to the level of a television broadcast camera, and allowed for simultaneous encoding of CD-quality audio in surround sound, multiple language audio tracks, and multiple subtitle tracks. MPEG-2 was a huge success and became the format used in DVD Video and also in digital satellite decoders as used by DSTV and Vivid.

That brings us almost up to the present, to around 1998. Filmmaker George Lucas was about to release the trailer for his much-anticipated prequel to the Star Wars trilogy. He threw out a challenge to the computer industry asking for a video format that would allow him to distribute his video trailer on the Internet with exceptional picture and sound quality. It was Apple Computer who won the challenge with their QuickTime format. The trailer for Star Wars: The Phantom Menace became the most downloaded item in history, and Apple established QuickTime as the dominant format for storing professional-quality video files.

Apple also went on to develop an interface which they called FireWire. This was a standard for computers to be able to talk to and control video cameras. And in order that the standard be used as widely as possible, Apple donated the standard to the Institute of Electrical and Electronics Engineers (IEEE), where it has since become known as standard 1394. Sony adopted the standard and incorporated this into their cameras, where they refer to it as the i.Link port.

Today, all we need to do in order to produce broadcast quality video is to have a camera and computer connected via a 1394 cable, and video editing software.

Apple has since made the QuickTime file format available to the Moving Picture Experts Group, where it has become the basis of the file format for MPEG-4. This new standard will allow for better compression and retrieval, and also provides for sharing of 3D objects, multiple camera angles, and support for new miniature video technology, such as 3G cellphones.

It’s very likely that ten years from now, we will look back at a DVD and ask, “what’s that?”